End-to-End Signal-Aware Direction-of-Arrival Estimation Using Weighted Steered-Response Power

J. Wechsler, W. Mack and E. A. P. Habets

Published in Proc. European Signal Processing Conf. (EUSIPCO): 41–45, 2022.

Contents of this Page

- Abstract of the EUSIPCO 2022 paper

- Poster for the ASC Forum 2022

- Example Audio: Source Extraction from a two-source Mixture

- Presentation Recording for EUSIPCO 2022

- Demonstration Video, recording of a real-time demo application

- References

Abstract

The direction-of-arrival (DOA) of acoustic sources is an important parameter used in multichannel acoustic signal processing to perform, e.g., source extraction. Deep learning-based time-frequency masking has been widely used to make DOA estimators signal-aware, i.e., to localize only the sources of interest (SOIs) and disregard other sources. The mask is applied to feature representations of the microphone signals. DOA estimators can either be model-based or deep learning-based, such that the combination with the deep learning-based masking estimator can either be hybrid or fully data-driven. Although fully data-driven systems can be trained end-to-end, existing training losses for hybrid systems like weighted steered-response power require ground-truth microphone signals, i.e., signals containing only the SOIs. In this work, we propose a loss function that enables training hybrid DOA estimation systems end-to-end using the noisy microphone signals and the ground-truth DOAs of the SOIs, and hence does not depend on the ground-truth signals. We show that weighted steered-response power trained using the proposed loss performs on par with weighted steered-response power trained using an existing loss that depends on the ground-truth microphone signals. End-to-end training yields consistent performance irrespective of the explicit application of phase transform weighting.
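To make the idea of mask-weighted steered-response power more concrete, the following is a minimal NumPy sketch, not the implementation used in the paper. It assumes a far-field source, a planar array, and a time-frequency mask that is already given (in the paper the mask is estimated by a DNN). The function name `weighted_srp`, its parameters, and the choice to apply the mask after the optional phase transform (PHAT) normalisation are assumptions made for illustration.

```python
import numpy as np


def weighted_srp(X, mask, mic_pos, freqs, angles_deg, c=343.0, phat=True):
    """Mask-weighted steered-response power over candidate azimuths (illustrative sketch).

    X: complex STFT of the microphone signals, shape (M, F, T)
    mask: time-frequency weights in [0, 1], shape (F, T) (assumed given)
    mic_pos: microphone positions in metres, shape (M, 2)
    freqs: centre frequency of each bin in Hz, shape (F,)
    """
    angles = np.deg2rad(np.asarray(angles_deg, dtype=float))
    # Unit vectors pointing from the array towards each candidate direction.
    u = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # (A, 2)
    # Far-field delay per microphone and direction; microphones closer
    # to the source receive the wavefront earlier (negative delay).
    tau = -(mic_pos @ u.T) / c  # (M, A)

    power = np.zeros(len(angles))
    n_mics = X.shape[0]
    for i in range(n_mics):
        for j in range(i + 1, n_mics):
            cross = X[i] * np.conj(X[j])  # cross-spectrum, (F, T)
            if phat:
                # Phase transform: keep only the phase of the cross-spectrum.
                cross = cross / (np.abs(cross) + 1e-12)
            # The mask decides which time-frequency bins contribute.
            weighted = (mask * cross).sum(axis=1)  # (F,)
            # Steering term that compensates the expected inter-microphone delay.
            delay = (tau[i] - tau[j])[None, :]  # (1, A)
            steer = np.exp(2j * np.pi * freqs[:, None] * delay)  # (F, A)
            power += np.real(weighted[:, None] * steer).sum(axis=0)
    return power
```

The DOA estimate is then the candidate angle at which the returned power is largest. With a mask that is close to one only in bins dominated by an SOI, bins dominated by other sources contribute little to the sum, which is what makes the estimator signal-aware.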

Poster for the ASC Forum 2022

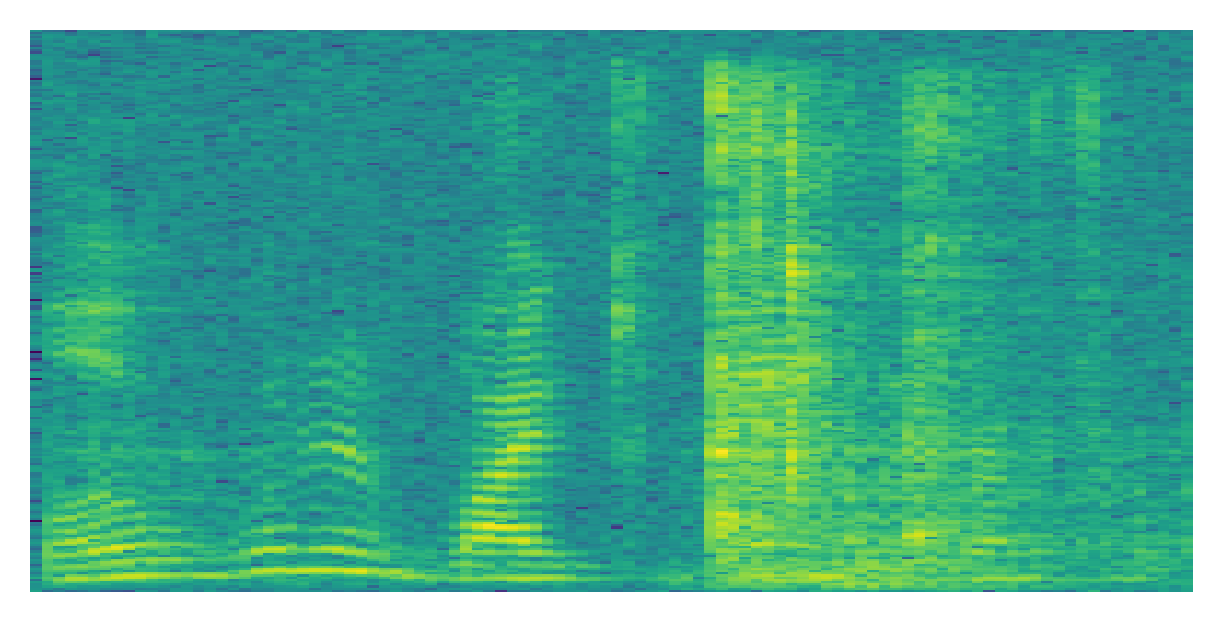

Example Audio: Source Extraction from a two-source Mixture using a Phase-Sensitive Mask (PSM) [1], Spatial Pseudo-Spectrum (SPS)-based Masking [2], and the Proposed Masking

Note that the SPS-based masking [2] and the proposed masking are not devised for source extraction.

EUSIPCO 2022 Presentation

Demonstration

The recording above shows a real-time demonstrator for signal-aware DOA estimation, in which the different approaches from the EUSIPCO paper are applied frame by frame. First, Julian Wechsler and Wolfgang Mack take turns explaining the setup of the video while only a single source is active (unfortunately, they misjudge the angle at which they are standing relative to the array). The last part of the explanation takes place while a moving interfering source is active in the background.

Please note: The training and the results in the paper are based on 100 time frames, whereas the demo shown here is based on frame-wise estimates. The output of the methods is therefore degraded in quality: the maxima used for estimation are noisier and less distinct. This shows in very noisy and broad estimates, and especially in the fact that the baseline masking cannot suppress the activity of the noise source when it alone is active (towards the end of the video). To compensate for this condition mismatch, the demo includes a moving average over the last 5 estimates and a threshold of 0.85 for the spatial pseudo-spectrum.
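The exact post-processing of the demo is not specified beyond the two numbers above, so the following Python sketch shows only one plausible reading. It assumes that the moving average is taken over the last 5 frame-wise spatial pseudo-spectra, that the pseudo-spectrum values lie in [0, 1], and that no DOA is reported when the smoothed peak stays below 0.85. The class name `SmoothedDoaTracker` and its interface are hypothetical.

```python
from collections import deque

import numpy as np


class SmoothedDoaTracker:
    """Moving average over frame-wise pseudo-spectra plus a peak threshold (sketch)."""

    def __init__(self, angles_deg, history=5, threshold=0.85):
        self.angles_deg = np.asarray(angles_deg, dtype=float)
        self.threshold = threshold
        # Only the most recent `history` pseudo-spectra are kept.
        self.buffer = deque(maxlen=history)

    def update(self, sps):
        """Add one frame-wise pseudo-spectrum; return a DOA in degrees or None."""
        self.buffer.append(np.asarray(sps, dtype=float))
        # Average the buffered pseudo-spectra (fewer than `history` at start-up).
        smoothed = np.stack(list(self.buffer)).mean(axis=0)
        peak = int(np.argmax(smoothed))
        # Assumed rule: report nothing when the smoothed peak is too weak.
        if smoothed[peak] < self.threshold:
            return None
        return float(self.angles_deg[peak])
```

In this reading, a single frame with a weak or misplaced maximum is averaged out by its neighbours, while a sustained drop of the peak below the threshold causes the tracker to stop reporting a direction.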

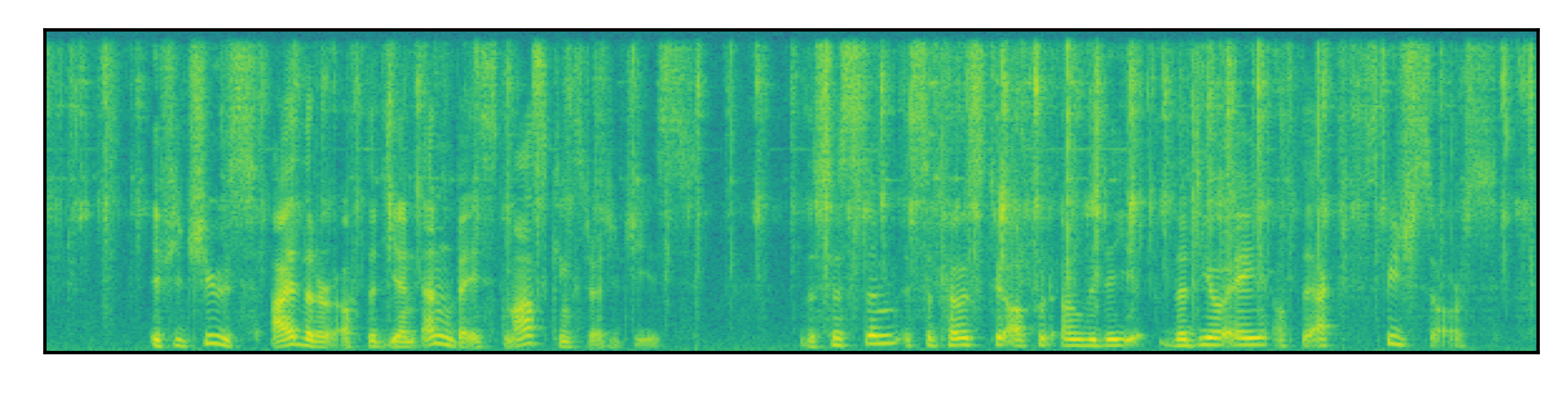

What does the DNN attend to in our recording?

References

[1] Z. Wang, X. Zhang, and D. Wang, “Robust speaker localization guided by deep learning-based time-frequency masking,” IEEE/ACM Trans. Audio, Speech, Lang. Process., vol. 27, no. 1, pp. 178–188, 2019.

[2] W. Mack, J. Wechsler, and E. A. P. Habets, “Signal-aware direction-of-arrival estimation using attention mechanisms,” Computer Speech & Language, vol. 75, p. 101363, 2022. [Online]. Available: https://doi.org/10.1016/j.csl.2022.101363